Making sense of AI’s contradictions: Technology infrastructure

How will GenAI help companies to rethink their technology?

Artificial intelligence (AI) may help organizations modernize their legacy technology—and it may contribute to an increase in technical debt.

The AI revolution is in many ways a hardware revolution, and it has brought back ’50s-era magnetic-tape storage.

Cloud computing is more important than ever—and AI is changing the cloud as we know it.

In the face of such contradictions, how should organizational leaders react? How do you implement AI when new AI tools and point solutions continue to be released, your organization is already dealing with technical debt, and machine learning (ML) systems present new opportunities to accrue more of it?

Questions about AI infrastructure, what’s needed to implement it at scale, and its various challenges—and idiosyncrasies—are becoming more pressing as organizations transition from smaller-scale AI experiments to real-life solutions used by employees and customers. But questions are good, and leaders looking to optimize the “I” in “ROI” as they begin to implement and scale AI solutions at their companies should be ready to explore them.

The CIO Paradox: When new AI apps meet old IT debts

What’s the matter?

In a Canva survey conducted by Harris Poll, 71% of CIOs reported they expected to adopt as many as 60 new applications in 2024, up from as many as 40 apps in 2022. Technology leaders are being asked to facilitate the adoption of these new apps, many of which will have AI capabilities, while also mitigating IT sprawl and legacy technical debt. In its coverage of the Canva survey, technology publication Runtime dubbed this phenomenon “the CIO Paradox.”

Slalom managing director of AI David Frigeri explains it this way:

“It’s the need to remediate and pay for some legacy technology upgrades to be able to support AI while at the same time being able to make progress going into the future with AI. It’s not one or the other, but both. How do our clients do both?”

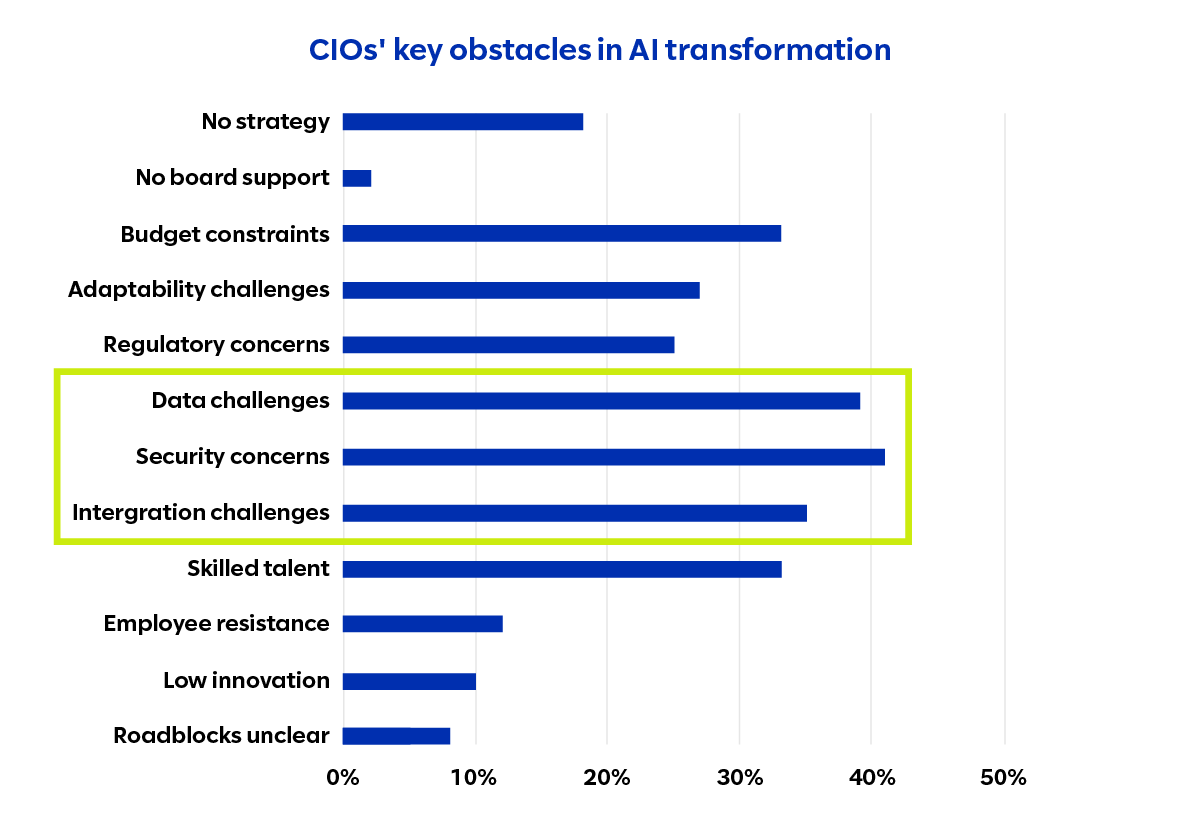

Since most engineering leaders are currently dealing with technical debt, the scenario Frigeri describes is likely quite common. This may help explain why the top three obstacles CIOs cited in Slalom’s 2023 executive survey on AI transformation are all technical in nature: data challenges, security concerns, and integration challenges.

As if it wasn’t hard enough for organizations to reconcile AI implementation with existing technical challenges—from aging infrastructure to outdated code—a growing body of research shows that the ML systems enabling AI’s use can introduce new kinds of technical debt. According to one research paper, these new ML-specific types of technical debt include hidden feedback loops, undeclared consumers, data dependencies, configuration issues, and a variety of “system-level anti-patterns” such as “pipeline jungles.”

What’s a leader to do?

Technical debt is almost inevitable. “Our customers are always struggling with technical debt because that’s just the business that we’re in, especially if you’re talking about older manufacturing organizations,” says Adam Limoges, Slalom’s director of global partner development for data and AI. But it doesn’t need to be a struggle. Just as financial debt can be a strategic tool for business growth, so can technical debt, provided organizations manage it responsibly and accept it as a trade-off for speed. The goal shouldn’t be zero technical debt whatsoever; it should be zero unintentional technical debt.

The first step toward that goal is identification. Experts such as analyst firm Gartner already consider generative AI (GenAI) to be a viable technology for identifying technical debt. We agree that AI is getting good at helping organizations identify not only technical debt but also other opportunities to optimize their tech stack. “It’ll be interesting to see how we can actually tune AI from the infrastructure perspective, to tune it to our environment to say, ‘Tell me what I’m missing in my environment. Tell me where I can actually get cost benefits from running that gateway,’” says Rahul Grover, a director of platform engineering at Slalom.

In terms of managing and “paying off” technical debt, we recommend a variety of tactics, including dedicating 15% of each sprint to debt remediation and adjusting that amount based on delivery timelines, debt backlogs, and tolerance levels. Making time for technical debt management in every sprint embodies the “clean-as-you-go” mantra followed by true craftspeople in every walk of life. Soon, teams may also be able to resolve considerably more technical debt in their allotted sprint time, since tools powered by AI and GenAI can already perform app upgrades and refactor functions. Even though these tools are still new and being refined, Gartner predicts that their use will be “common” by 2027 and will help reduce technology modernization costs by up to 70%.
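To make the sprint allocation concrete, here is a minimal sketch of the arithmetic. The 15% starting share comes from the recommendation above. The `adjustment` parameter and the 5% floor and 30% ceiling are hypothetical values chosen only for illustration, and each team would set its own.

```python
def debt_hours(sprint_hours: float,
               base_share: float = 0.15,   # starting share from the text
               adjustment: float = 0.0,    # hypothetical: +/- tweak per sprint
               min_share: float = 0.05,    # hypothetical floor
               max_share: float = 0.30) -> float:  # hypothetical ceiling
    """Return the hours of a sprint reserved for debt remediation."""
    # Apply the adjustment, then clamp the share to the allowed range.
    share = min(max(base_share + adjustment, min_share), max_share)
    return round(sprint_hours * share, 1)


# A team with 400 available hours in a sprint:
print(debt_hours(400))                    # default 15% share
print(debt_hours(400, adjustment=-0.05))  # tight delivery timeline
print(debt_hours(400, adjustment=0.10))   # large debt backlog
```

The clamp is one way to keep the allocation from drifting to zero under deadline pressure, which is how unintentional debt tends to build up.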

When it comes to preventing and mitigating ML-related technical debt, leaders in the ML community advocate for practices like:

- Extensive documentation, done and discussed by humans. This includes documenting every proposed model change for analysis and evaluation.

- Consultation with domain experts during the model evaluation process, when there’s time to observe model behavior and recognize anomalies.

- Mitigating lock-in to any one model provider by accessing pretrained models through managed cloud services based on application programming interfaces (APIs).
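The last practice, limiting lock-in by reaching models through APIs, can be sketched as a thin interface that application code depends on instead of a specific provider. Everything named below (`TextModel`, `ProviderA`, `ProviderB`, `summarize`) is hypothetical, and the provider classes are stand-ins that return canned strings instead of calling any real service.

```python
from typing import Protocol


class TextModel(Protocol):
    """Hypothetical interface the application codes against."""

    def complete(self, prompt: str) -> str: ...


class ProviderA:
    """Stand-in for one managed model API (no real network call)."""

    def complete(self, prompt: str) -> str:
        return f"[provider-a] {prompt}"


class ProviderB:
    """Stand-in for a second managed model API (no real network call)."""

    def complete(self, prompt: str) -> str:
        return f"[provider-b] {prompt}"


def summarize(model: TextModel, text: str) -> str:
    # Application logic only knows about the interface, not the provider.
    return model.complete(f"Summarize: {text}")


# Swapping providers is a one-line change at the call site.
print(summarize(ProviderA(), "quarterly infrastructure report"))
print(summarize(ProviderB(), "quarterly infrastructure report"))
```

In a real system each provider class would wrap that provider's client library, and the rest of the codebase would stay unchanged when a model is swapped.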

Compute: Training, inference, and respecting what works

What’s the matter?

We are in a new era of computing. Parallel processing, graphics processing units (GPUs), and other computing innovations have significantly enhanced AI workloads, specifically the workloads used to train AI models. This has helped give rise to GenAI.

As incredible as these computing breakthroughs are, the cutting-edge chips used for training AI models are becoming less interesting to organizations as they graduate from training models (or selecting pretrained ones) to deploying them as parts of real-life solutions. More businesses are starting to focus their attention on the computing that happens after training: inference, the process of generating responses when an end user interacts with an AI model—a process that incurs ongoing computational costs.

If inference costs go overlooked, organizations risk underestimating the cost necessary to scale proofs of concept (POCs) into generally available solutions. “We’ve seen sticker shock after a POC is rolled out, because the team wants to scale it out from the small test group they did and they realize if they release that to the whole company that it’s an OMG moment,” says Thomas Edmondson, a principal consultant in Slalom’s Microsoft Data and AI practice.
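A rough back-of-the-envelope projection shows how this sticker shock can arise. The sketch below assumes per-token pricing billed per 1,000 tokens. The user counts, request volumes, token counts, and price are all made-up figures for illustration, not numbers from any provider or from the teams quoted here.

```python
def monthly_inference_cost(users: int,
                           requests_per_user_per_day: int,
                           tokens_per_request: int,
                           price_per_1k_tokens: float,
                           days: int = 30) -> float:
    """Estimate monthly inference spend under simple per-token pricing."""
    total_tokens = users * requests_per_user_per_day * tokens_per_request * days
    return round(total_tokens / 1000 * price_per_1k_tokens, 2)


# Hypothetical POC: 50 testers, 20 requests a day, 1,500 tokens per request.
poc = monthly_inference_cost(50, 20, 1500, price_per_1k_tokens=0.01)

# Same usage pattern rolled out to a hypothetical 20,000 employees.
rollout = monthly_inference_cost(20_000, 20, 1500, price_per_1k_tokens=0.01)

print(f"POC:     ${poc:,.2f} per month")
print(f"Rollout: ${rollout:,.2f} per month")
print(f"Scale factor: {rollout / poc:.0f}x")
```

Because cost grows linearly with usage in this simple model, a POC bill that looks negligible can turn into a very different number once the user base grows by a few hundred times.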

What’s a leader to do?

Technology providers are starting to offer new solutions to meet the computing demands of running AI-powered apps at scale. Describing NVIDIA’s solutions in this space, Edmondson says that “the implementers of AI can start using NVIDIA’s hardware more directly and start seeing a cost reduction versus the traditional methods of using infrastructure even in the cloud.” But the cloud and these offerings don’t have to be mutually exclusive. Case in point: NVIDIA is allowing its new “inference microservices” to run on the infrastructure of an organization’s choice, including the hyperscalers’ cloud platforms.

Even with the buzz about inference and new offerings like inference microservices, the future of computing won’t be wholly unfamiliar. That’s because the inference process resembles traditional computing much more than training does. This means that traditional central processing units (CPUs) might work just fine for inference workloads. In this way, “legacy” technology may still have relevance in the AI technology stack. How efficiently an organization uses AI will increasingly depend on whether it’s using the right models and computing resources for each specific use case and task, not necessarily the newest or most powerful ones.

Another example of this is the increase in demand for magnetic-tape storage. This storage method was introduced in the 1950s and was rumored to be “dying” or “dead” for years. With the rise of AI and GenAI, it has become a favored solution for storing AI training data due to its cost-effectiveness and reliability over long periods. All of this goes to show that to meet AI’s diverse infrastructure needs, leaders will need to be curious about sophisticated new technologies while still paying due respect to older-yet-practical methods.


Cloud computing: Essential and evolving

What’s the matter?

AI services have long been offered by cloud providers. The rise of GenAI and the availability of pretrained models through cloud providers’ Model as a Service (MaaS) offerings have made the cloud feel even more important. As one research paper on the industrialization of AI describes, “Cloud platforms and their marketplaces play a central role in AI development and integration by offering infrastructure-as-a-service (e.g., compute capacity) and commodifying digital products (e.g., pre-trained models or software packages).”

But AI is also changing the cloud as we know it. Forrester’s analysis on the future of cloud technology highlights that “AI is transforming not only what services are available to cloud customers but how the cloud operates—and will ultimately transform what we mean by ‘cloud.’”

Forrester describes a future-state cloud that is much more “abstracted, intelligent, and composable” because AI will be interwoven across cloud providers’ entire portfolios, not just cloud services for AI/ML. “AI can be used to make cloud smarter yet less complex for the user,” per the analysis. “This is the foundation that supports abstraction and better platform operations enablement.”

What’s a leader to do?

Since AI is both supported by the cloud and making cloud use easier, organizations might benefit from thinking about cloud migration and modernization as part of their AI strategy. “If you have not done your cloud migration, now might be the time,” says Edmondson. While AI-enhanced cloud services could make migration easier and faster, cloud adoption will always require a degree of care. We agree with CIO.com’s perspective that “CIOs shouldn’t jump headfirst [into AI] without the right cloud environment and strategy.”

Cloud financial management and operations are key to having the right cloud environment and strategy for AI implementation. Unfortunately, the GenAI pricing model has arrived, bringing more complexity and more unpredictability in its nascency just as cloud financial operations (FinOps) have matured as a discipline. We think it’s safe to predict, however, that optimizing your AI investments—including those made through cloud services—will get easier as we collectively gain more experience doing so and as the pricing model itself gets hammered out across technology providers.

On that note, Jeff Kempiners, managing director of Slalom’s Amazon Center of Excellence (CoE), predicts the continued development and standardization of a tiered AI pricing structure. “Your simpler AI workloads aren’t going to cost as much as running it on sort of the providers’ top-tier stuff, and it’s logical to the way the rest of the cloud works,” says Kempiners. “You size according to your workload.”
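One way to picture sizing to the workload is a lookup that picks the cheapest tier able to handle a given task. The tier names, the 1-to-3 capability scale, and the prices below are hypothetical placeholders, not any provider's actual pricing structure.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tier:
    name: str
    capability: int             # hypothetical scale: 1 (simple) to 3 (complex)
    price_per_1k_tokens: float  # hypothetical price


# Made-up tiers for illustration only.
TIERS = [
    Tier("small", 1, 0.0005),
    Tier("medium", 2, 0.003),
    Tier("large", 3, 0.03),
]


def cheapest_tier(required_capability: int, tiers: list[Tier] = TIERS) -> Tier:
    """Return the lowest-priced tier that meets the required capability."""
    eligible = [t for t in tiers if t.capability >= required_capability]
    if not eligible:
        raise ValueError("no tier meets the required capability")
    return min(eligible, key=lambda t: t.price_per_1k_tokens)


# A simple classification task versus a complex reasoning task.
print(cheapest_tier(1).name)
print(cheapest_tier(3).name)
```

The same pattern applies to compute choices, where a simpler workload may be served well by a less expensive option.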

Designing AI solutions for people

A lot remains unknown about the future, whether it’s the future of the cloud or our future with AI. While leaders should be interested in how AI influences infrastructure approaches and priorities, especially given infrastructure’s impact on cost, examining how AI influences people—and how we harness it to build better tomorrows—will always be at the top of our list. As Kempiners says, “The technology complexity is being solved more and more, but the people are complicated.” As a fiercely human business and technology consulting company, Slalom focuses on the people part of implementing emerging technology as much as the infrastructure part, and our customers fare better because of it. Learn more about our AI solutions and services.

Thank you to the following Slalom experts who helped inform this piece: Brent Adam, Ted Hunter, Sidney Harrison, Rob Koch, Ernest Kugel, Eric McElroy, Princess Palacios Reyes, Geoff Parris, Ian Russell-Lanni, and John Wright.

Thomas Edmondson and Geoff Parris are no longer with Slalom.