Making sense of AI’s contradictions: Developer workforce

Is working (and coding) faster than ever with GenAI a boon or a risk?

It has many names. Grunt work. Drudgery. Undifferentiated heavy lifting. It’s the type of work that slows employees down, tires them out, and wears away at their resolve to keep marching toward big goals that require many little steps.

At our Slalom Speaks employee event in 2023, DevOps consultant Andrew Marshall referred to it as toil. Marshall was recounting his time at a company where engineers needed to shut down development for two straight days to push any software updates into production. “When I saw people who were willing to put up with really painful processes just to be able to do the smallest things that they found interesting, it really made me sad,” said Marshall.

One of the promises of AI, and especially GenAI, is that it will help teams and organizations reduce or eliminate toil in many areas, including software development. With a reported 95% of developers already using AI tools to write code, we're starting to see whether that promise rings true. And the results are more nuanced than you might think.

The reward of AI coding tools: Speed

By some reports, AI-powered developer assistant tools are helping organizations cut development times by 50%. In a pilot Slalom facilitated with a healthcare customer, participants using one such tool created or deleted an average of 156% more lines of code per minute.

We've been involved in other experiments with AI coding tools in which teams doubled their sprint goals week over week. Our product engineering division, Slalom Build, has also integrated AI into its methodology, which has contributed to a 30% increase in team velocity and a 20%-30% reduction in project costs for customers.

Customer feedback that these tools can "do a lot of heavy lifting" brings to mind "infinite interns," the term tech analyst Benedict Evans coined to describe AI and machine learning (ML). While the benefits of infinite interns may seem self-evident for developers, it's important to connect those benefits to the business outcomes they enable. The same Slalom Build blog article that reports higher velocity and lower project costs with AI also outlines the following business outcomes from using these tools:

Accelerated time to market for new features and products

Quicker responses to market demands and opportunities

Enhanced agility and competitive edge

The potential for increased market share and revenue growth

As exciting as these outcomes are, the only organizations capable of achieving them are those that are as prepared for the risks of speed as they are for the benefits.

The risk of AI coding tools: Also speed

How fast is too fast? A more pointed version of that question comes from Joshua Gruber, a senior technology leader at Slalom Build: “If I was sprinting before and now I’m double-sprinting, do I need more time to catch my breath?” The question is thought-provoking, and the answer isn’t immediately clear. What we do know, however, is that the sheer speed of developers with access to AI tools can create its own set of issues.

One risk that comes with this level of speed is a bottleneck at the testing phase, as developers outpace quality assurance (QA) teams. The same kind of pressure led the developer forum Stack Overflow to ban users from posting AI-generated answers in December 2022, a "temporary" ban (still in place at the time of writing) meant to alleviate pressure on its volunteer-based quality control infrastructure.

While a growing body of research appears to consistently link AI coding tools with speed, findings on quality are more mixed:

A code review software company reports a correlation between the release of AI coding tools and “downward pressure on code quality”

The maker of an AI coding assistant tool reports greater developer confidence in code quality with the use of its tool

Academic research offers early evidence of a productivity increase among software engineers using AI coding tools, as measured by pull requests completed per week, though not necessarily by pull requests that pass code review

At Slalom, we're eager for more research on the relationship between AI coding tools and code quality. We're also discovering firsthand that quality depends on much more than a tool's mere availability: it depends on factors including the guardrails an organization puts in place for the tool's use, the training delivered to developers, and the support provided to QA teams and quality engineers. These dependencies are why Gruber has written that development teams need to take at least as much care with AI-generated code as most of them already take with human-generated code under the "two pairs of eyes" rule. We may have "infinite interns," but we shouldn't leave them unattended.

Jared Matfess, a senior director on Slalom’s global Microsoft team, made a different comparison to interns that speaks more directly to these limitations:

“GenAI is going to be like the intern that’s super eager to please people, that’s going to create some solution that’s not really scalable, because it’s not going to have the experience and know-how to do that.”

Navigating the nuance

Like AI itself, quicker coding turns out to be neither all good nor all bad. Faster development can mean faster time to market and rapid responses to market dynamics, but it can also mean bottlenecks in the overall software development lifecycle (SDLC), lower-quality code, and more security vulnerabilities.

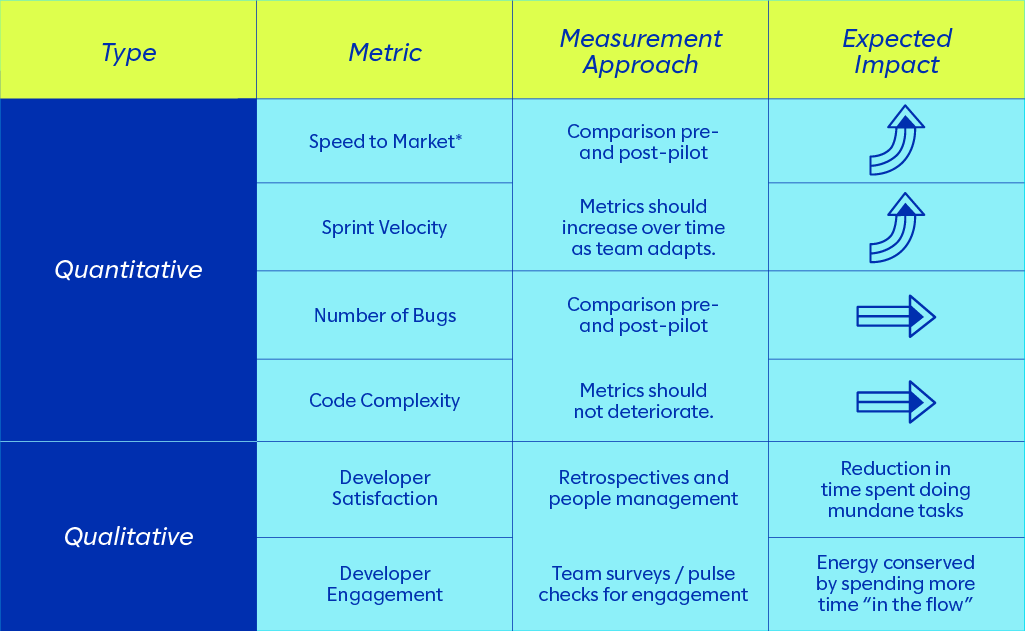

But we wouldn’t be integrating AI into our product engineering methodology if we didn’t think there was enough of an upside to outweigh the risks. The first step toward maximizing that upside is to set clear metrics that cover multiple dimensions, beginning with people and their satisfaction and well-being. Satisfaction is the first metric of the SPACE framework, a framework for measuring productivity that experts assert should be applied to engineering. In keeping with the SPACE framework and its multidimensionality, we recommend tracking developer satisfaction alongside speed-related metrics, and tracking those metrics alongside quality-related metrics like bug count and code complexity.

*Speed-to-market metric contingent on mitigating risks associated with AI coding tools and following best practices for adoption.

*Speed-to-market metric contingent on mitigating risks associated with AI coding tools and following best practices for adoption.
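To make the recommendation to track satisfaction, speed, and quality together more concrete, here is a minimal Python sketch, assuming a team records one snapshot per sprint. The `SprintMetrics` fields, the 1-5 survey scale, and the `review` function are hypothetical illustrations, not part of the SPACE framework or any Slalom tool, and the sample numbers are invented.

```python
from dataclasses import dataclass


@dataclass
class SprintMetrics:
    """Hypothetical per-sprint snapshot spanning several dimensions."""
    satisfaction: float    # satisfaction: mean developer survey score (assumed 1-5 scale)
    story_points: int      # speed: work completed in the sprint
    bug_count: int         # quality: defects attributed to the sprint's work
    avg_complexity: float  # quality: e.g. mean cyclomatic complexity per function


def review(before: SprintMetrics, after: SprintMetrics) -> list[str]:
    """Flag speed gains that arrive together with losses in other dimensions."""
    warnings = []
    faster = after.story_points > before.story_points
    if faster and after.bug_count > before.bug_count:
        warnings.append("velocity up, but bug count also rose")
    if faster and after.avg_complexity > before.avg_complexity:
        warnings.append("velocity up, but code complexity also rose")
    if after.satisfaction < before.satisfaction:
        warnings.append("developer satisfaction fell")
    return warnings


# Invented numbers, for illustration only.
baseline = SprintMetrics(satisfaction=3.9, story_points=40, bug_count=6, avg_complexity=4.2)
with_ai = SprintMetrics(satisfaction=3.7, story_points=62, bug_count=9, avg_complexity=4.1)
print(review(baseline, with_ai))
# ['velocity up, but bug count also rose', 'developer satisfaction fell']
```

The design choice is that a faster sprint never counts as a win on its own: the review comes back clean only when speed improves without quality or satisfaction slipping. Real thresholds, survey instruments, and complexity measures would be each team's own decisions.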

Another way to maximize AI's positive impact in engineering and beyond is to train teams to use AI effectively. That requires shifts in thinking, behavior, and organizational structure that apply to working with AI in general, not just with coding assistants.

From knowledge retrievers to thought partners

AI chatbots are not search engines, yet many people treat them as such. One of Gruber’s favorite resources on this topic is an episode of the podcast You Are Not So Smart in which the guests discuss a cognitive bias called the Einstellung effect, where people prefer to solve problems using the methods they know, even if those methods aren’t the most effective.

More experienced developers tend to be more susceptible to the Einstellung effect because they've had more time to settle into certain ways of working, including searching the web for code snippets through interfaces that look deceptively like those of AI chatbots. So when these developers start using chatbots, they're liable to use them the way they've used search engines. Fortunately, proper training can address this conflation.

Describing the shift in how we should perceive chatbots, Gruber says, “You get a lot more out of these tools if you’re treating chatbots like people—I feel odd saying that—by having an interaction with them and not just asking for them to do something.”

A related skill that will benefit developers and other AI users is prompt engineering. Part art and part science, prompt engineering is the design and refinement of the inputs given to AI models with the goal of eliciting specific, high-quality outputs faster. We're continuing to gain firsthand experience with prompt engineering for development; for example, one of the 2023 Slalom Build hackathon teams built a browser extension that uses GenAI to simplify text for readers.
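The contrast between a search-style query and a structured, conversational prompt can be sketched in Python. This is a minimal illustration under stated assumptions: the `build_messages` and `refine` helpers, the template fields, and the example task are hypothetical, and the role/content message shape is assumed to resemble what many chat APIs accept. The code only assembles the messages; it does not call any model.

```python
# A search-engine-style query: terse keywords, no context, no stated goal.
search_style = "python sort dict by value"


def build_messages(task: str, context: str, constraints: list[str],
                   output_format: str) -> list[dict[str, str]]:
    """Assemble a structured prompt as a list of chat messages (hypothetical template)."""
    constraint_lines = "\n".join(f"- {c}" for c in constraints)
    system = "You are a senior Python reviewer. Ask a clarifying question if the request is ambiguous."
    user = (
        f"Context: {context}\n"
        f"Task: {task}\n"
        f"Constraints:\n{constraint_lines}\n"
        f"Output format: {output_format}"
    )
    return [{"role": "system", "content": system}, {"role": "user", "content": user}]


def refine(messages: list[dict[str, str]], model_reply: str,
           follow_up: str) -> list[dict[str, str]]:
    """Continue the same conversation instead of starting over with a new query."""
    return messages + [
        {"role": "assistant", "content": model_reply},
        {"role": "user", "content": follow_up},
    ]


conversation = build_messages(
    task="Sort a dict of test names to run times by run time, slowest first.",
    context="Nightly CI report script; the dict has about 2,000 entries.",
    constraints=["Standard library only", "Keep ties in insertion order"],
    output_format="One function with a docstring, then a one-line usage example.",
)
conversation = refine(
    conversation,
    model_reply="(the model's first draft would go here)",
    follow_up="Return only the 10 slowest tests.",
)
print(len(conversation))  # 4
```

Where the search-style string carries only keywords, the structured version states context, constraints, and the expected output, and `refine` treats the exchange as an ongoing interaction rather than a one-off lookup.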

From order takers to system makers—and managers

AI-powered low- and no-code development tools are allowing more nontechnical employees to create software, giving rise to what we call “citizen developers.” But if everyone can develop, then what does it mean to be a professional developer?

According to one of our managing directors, David Gregg, it means thinking more about the big picture, or what he calls "systems thinking." Now more than ever, organizations need employees who can do the higher-order thinking about what software to build and what code to write, and who can advise nontechnical employees using low- and no-code development tools.

Adds Gruber, “I think that GenAI concentrates developers on the stuff that we want from them and that they need to be thinking about as they rise up, as they become architects and higher: the end user, how this is used, how this fits into the software.”

From isolated experimentation to programmatic innovation

As developers and nontechnical employees alike weave AI into their ways of working, organizations that make structural changes—such as forming centralized teams dedicated to managing employees’ use of AI—stand to benefit the most and risk the least.

Matfess astutely describes the why and how of this shift: “GenAI is not a new product or capability. It’s a transformation. It’s a new way of working, and in order for it to be successful within your organization, you have to treat it like a long-running program. You have to have people and support continually helping to reinforce the new ways of thinking about your work and adopting these new capabilities, coming up with new KPIs, just reimagining certain aspects, and that’s the sort of secret sauce.”

Want to learn more about AI in engineering? Check out Slalom Build’s overview of AI-Accelerated Engineering. Learn more about our overall AI services at slalom.com/ai.

Jared Matfess is no longer with Slalom.